Nvidia continues to invest in AI initiatives, and its latest update to ChatRTX is no exception.

According to the tech giant, ChatRTX is a "demo app that lets you personalize a GPT large language model (LLM) connected to your own content." That content includes your PC's local documents, files, and folders, and the app essentially builds a custom AI chatbot from that information.

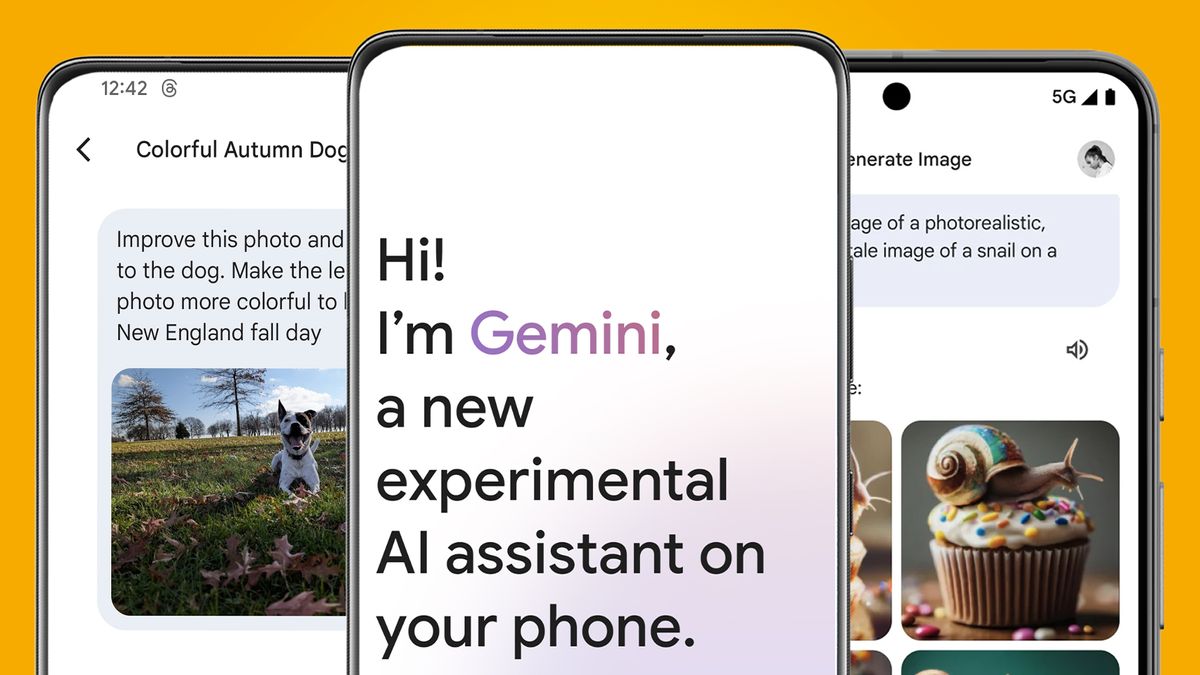

Because it runs locally without needing an internet connection, it gives users quick answers to questions whose answers might be buried among all those computer files. With the latest update, it can draw on even more data and supports more LLMs, including Google Gemma and ChatGLM3, an open, bilingual (English and Chinese) LLM. It can also search for photos locally, and it now supports Whisper, OpenAI's AI-powered automatic speech recognition system, so users can talk to ChatRTX with their voice.

Nvidia uses its TensorRT-LLM software and RTX graphics cards to power ChatRTX's AI. And because everything runs locally, your data stays on your machine, which makes it more private than online AI chatbots. You can download ChatRTX from Nvidia's website and try it out for free.

Can AI escape its ethical dilemma?

The concept of an AI chatbot that draws on local data from your PC, instead of training on (read: stealing) other people's online works, is rather intriguing. It seems to sidestep the ethical dilemma of using and hoarding copyrighted works without permission. It also seems to solve another long-standing problem that has plagued many a PC user: actually finding long-buried files in File Explorer, or at least the information trapped within them.

However, there's the obvious question of how such a limited data pool could hurt the chatbot's responses. Unless the user is particularly skilled at training AI, this could become a serious issue down the line. Of course, using it only to locate information on your PC is perfectly fine, and is most likely its intended use.

But the point of an AI chatbot is to have unique and meaningful conversations. Maybe there was a time when we could have done that without rampant theft, but corporations have powered their AI with words taken from other sites, and now the two are irrevocably tied together.

It's highly unethical that data theft has become the vital ingredient that makes chatbots well-rounded enough to avoid getting trapped in feedback loops. Given that, Nvidia's approach could become a middle ground for generative AI. If fully developed, it could prove that we don't need that ethical transgression to power and shape these models, so here's to hoping Nvidia gets it right.