OpenAI has made fine-tuning available for its GPT-4o model, letting users adapt the AI to specific project needs. Previously, GPT-4o, a faster and more affordable model in the GPT-4 family, could only be steered through detailed prompts. Now users can fine-tune the model itself, refining its tone, style, and accuracy even further.

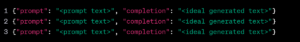

Fine-tuning is the process of further training an AI model on task-specific examples after its primary training, and it can noticeably change the output with relatively little effort. OpenAI states that providing just a few dozen examples can be enough to shift the AI’s responses to better fit your desired use case. This makes the technology more adaptable to niche applications, whether you’re developing a chatbot, generating content, or automating complex queries.

For instance, if you’re creating a chatbot, you can supply several question-and-answer pairs that reflect the conversational style you’re aiming for. Once fine-tuning is complete, GPT-4o will produce responses that are more closely aligned with those examples, creating a more consistent and personalized interaction.
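To make the chatbot example concrete, here is a minimal sketch of how such question-and-answer pairs might be written out as a training file. It assumes the chat-style JSONL layout used for fine-tuning chat models (one JSON object per line, each holding a `messages` list with `system`, `user`, and `assistant` roles). The system prompt, the sample pairs, the helper names, and the output filename are all hypothetical and exist only for illustration.

```python
import json

# Hypothetical system prompt and Q&A pairs, invented for illustration only.
SYSTEM_PROMPT = "You are a friendly support assistant for a bike shop."
QA_PAIRS = [
    ("Do you repair flat tires?", "We sure do! Most flats are fixed while you wait."),
    ("What are your opening hours?", "We're open 9am to 6pm, Monday through Saturday."),
]


def build_training_lines(system_prompt, qa_pairs):
    """Return one JSON string per training example, in chat format."""
    lines = []
    for question, answer in qa_pairs:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        # ensure_ascii=False keeps non-ASCII characters readable in the file.
        lines.append(json.dumps(example, ensure_ascii=False))
    return lines


def write_jsonl(path, lines):
    """Write the examples to disk, one JSON object per line (JSONL)."""
    with open(path, "w", encoding="utf-8") as handle:
        for line in lines:
            handle.write(line + "\n")


if __name__ == "__main__":
    # "chatbot_train.jsonl" is an arbitrary, hypothetical filename.
    write_jsonl("chatbot_train.jsonl", build_training_lines(SYSTEM_PROMPT, QA_PAIRS))
```

A real dataset would contain at least the few dozen examples OpenAI mentions, all written in the tone you want the chatbot to adopt. The resulting file would then be uploaded when creating a fine-tuning job.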

As an added incentive, OpenAI is offering 1 million free training tokens per day for fine-tuning until September 23, 2024. After this date, training will cost $25 per million tokens, and running the fine-tuned model costs $3.75 per million input tokens and $15 per million output tokens. Given that a million tokens represent a substantial amount of text (roughly 750,000 English words by OpenAI’s rule of thumb), this pricing is designed to be accessible for a wide range of projects.
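As a rough illustration of what these prices mean in practice, the sketch below estimates training and usage costs from token counts. The rates are the ones quoted above. The function names are hypothetical, the `epochs` parameter assumes that billed training tokens scale with the number of passes over the training file, and the free-token promotion is ignored.

```python
# Prices quoted in the article, in US dollars per one million tokens.
TRAINING_USD_PER_MILLION = 25.00
INPUT_USD_PER_MILLION = 3.75
OUTPUT_USD_PER_MILLION = 15.00


def training_cost(file_tokens, epochs=1):
    """Estimate the fine-tuning cost in USD.

    Assumption: billed tokens = tokens in the training file * epochs.
    """
    billed_tokens = file_tokens * epochs
    return billed_tokens / 1_000_000 * TRAINING_USD_PER_MILLION


def inference_cost(input_tokens, output_tokens):
    """Estimate the cost in USD of running the fine-tuned model."""
    input_usd = input_tokens / 1_000_000 * INPUT_USD_PER_MILLION
    output_usd = output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION
    return input_usd + output_usd


if __name__ == "__main__":
    # Hypothetical workload: a 500,000-token training file run for 3 epochs,
    # then 1M input tokens and 1M output tokens of usage.
    print(f"Training:  ${training_cost(500_000, epochs=3):.2f}")
    print(f"Inference: ${inference_cost(1_000_000, 1_000_000):.2f}")
```

Under these assumptions, the hypothetical 500,000-token file trained for three epochs is billed as 1.5 million tokens, or $37.50, and a million tokens each of input and output adds $18.75.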

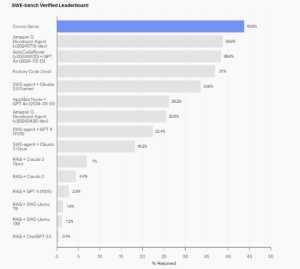

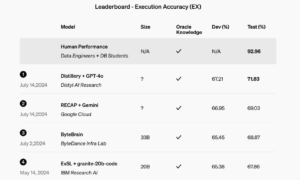

To showcase what fine-tuning can do, OpenAI partnered with developers like Cosine and Distyl. Cosine used fine-tuning to enhance its AI, Genie, making it more effective at debugging code. Distyl fine-tuned a text-to-SQL model that took first place on the BIRD-SQL benchmark with an execution accuracy of 71.83%, demonstrating how targeted training can improve performance on specific tasks.

Privacy-conscious users also have some assurances. OpenAI states that businesses retain full ownership of their data, including all inputs and outputs, when fine-tuning GPT-4o. The data used for training is kept private and is not shared or reused for training other models. OpenAI does, however, monitor fine-tuned models for misuse to ensure compliance with its usage policies.

This new fine-tuning option gives developers and businesses a way to build more tailored and effective AI-driven solutions. Whether you’re looking to enhance customer interactions, streamline workflows, or improve results on a specialized task, fine-tuning GPT-4o is worth evaluating for your project.